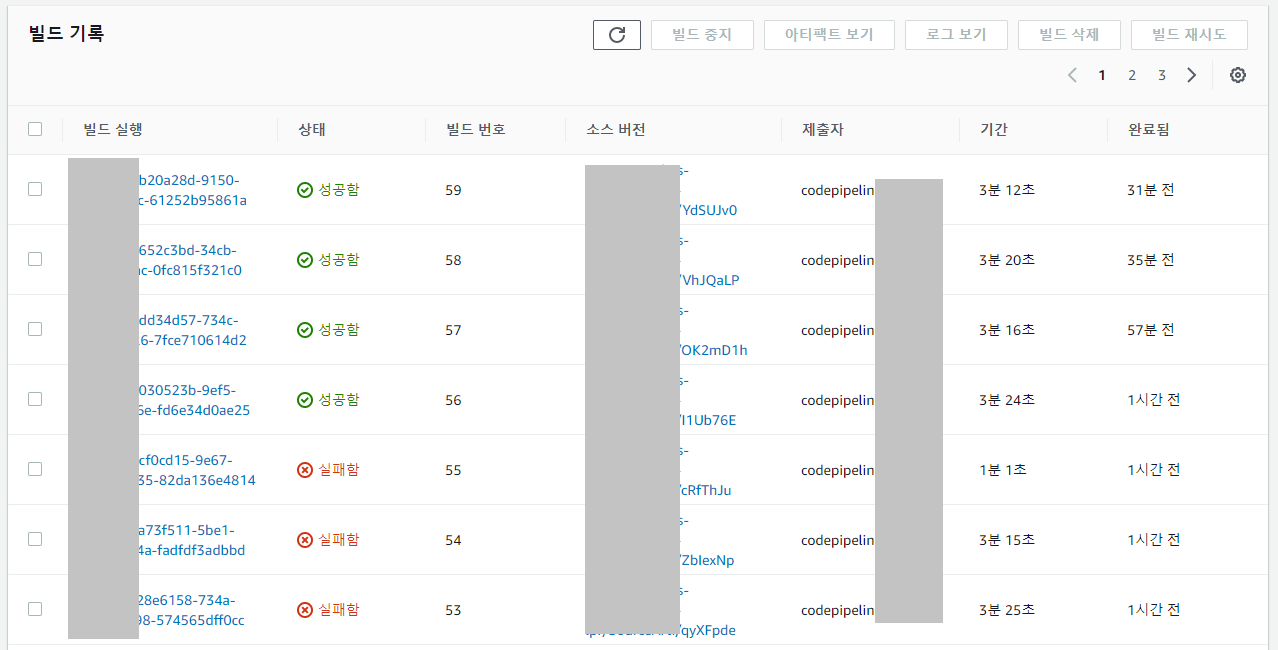

A collection of errors encountered during CodeBuild builds.

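Build project configuration validation

: When configuring a new build project, if validation fails even once, every re-validation prints a nonsensical message. The role and policy are in fact already created during validation, so a message saying that a role and policy with the same name already exist would be the accurate one. Deleting the auto-generated role and policy alone does not help either. Deleting the role and policy and then configuring the build project from scratch in a single pass resolves it. (After even one validation failure, you run into the message below.)

The service role name is invalid. Valid characters are (a string of gibberish) !@#$...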

Access denied when downloading the source from S3

: Resolved by adding the AmazonS3ReadOnlyAccess policy

Waiting for DOWNLOAD_SOURCE

AccessDenied: Access Denied

status code: 403, request id: VX7AKNM69P3N7123, host id: 123y3WpjIvTzVViVzHclfn4F3UfDg4mJA++8BSY6q0jQyEPCWr4cohc0dVJ+q08N/123LMNToEI= for primary source and source version arn:aws:s3:::source-artifact-temp/example/SourceArti/BwUEum1
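A minimal sketch of attaching that managed policy from the command line. It assumes the service role is the exampleCodeBuildServiceRole that appears in the later logs (the S3 log above does not name the role) and that the caller is allowed to call iam:AttachRolePolicy. The helper names are hypothetical.

```shell
# Build the ARN of an AWS managed policy from its name.
managed_policy_arn() {
  printf 'arn:aws:iam::aws:policy/%s\n' "$1"
}

# Attach an AWS managed policy to an IAM role.
# $1 = role name (assumed here to be the CodeBuild service role)
# $2 = managed policy name
attach_managed_policy() {
  role_name="$1"
  policy_name="$2"
  aws iam attach-role-policy \
    --role-name "$role_name" \
    --policy-arn "$(managed_policy_arn "$policy_name")"
}

# Example call (needs AWS credentials, so it is left commented out):
# attach_managed_policy exampleCodeBuildServiceRole AmazonS3ReadOnlyAccess
```

The same helper would work for the other managed policies mentioned in this post by swapping the policy name.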

buildspec.yml path error

: Resolved by checking the path

Phase context status code: YAML_FILE_ERROR Message: stat /codebuild/output/src123816321/src/git-codecommit.ap-northeast-2.amazonaws.com/v1/repos/example/Api/buildspec.yml: no such file or directory
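In the log above, CodeBuild looked for Api/buildspec.yml relative to the source root and did not find it. One way to check the path before pushing is to list where buildspec.yml actually lives in a local checkout. This is a sketch; the function name is hypothetical.

```shell
# Print every buildspec.yml under a checkout, relative to its root,
# so the result can be compared with the buildspec path configured
# in the build project.
list_buildspecs() {
  repo_root="${1:-.}"
  (cd "$repo_root" && find . -type f -name 'buildspec.yml' | sed 's|^\./||' | sort)
}

# Example: list_buildspecs /path/to/checkout
```

If the printed path differs from the one in the YAML_FILE_ERROR message, either the file or the project's buildspec setting needs to change.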

ECR login error

: Resolved by adding the AmazonEC2ContainerRegistryPowerUser policy

Running command aws --version

aws-cli/1.20.58 Python/3.9.5 Linux/4.14.252-195.483.amzn2.x86_64 exec-env/AWS_ECS_EC2 botocore/1.21.58

Running command $(aws ecr get-login --region $AWS_DEFAULT_REGION --no-include-email)

An error occurred (AccessDeniedException) when calling the GetAuthorizationToken operation: User: arn:aws:sts::123151105123:assumed-role/exampleCodeBuildServiceRole/AWSCodeBuild-ccf3e123-4123-4123-9ba1-1231fc6797b8 is not authorized to perform: ecr:GetAuthorizationToken on resource: * because no identity-based policy allows the ecr:GetAuthorizationToken action

Command did not exit successfully $(aws ecr get-login --region $AWS_DEFAULT_REGION --no-include-email) exit status 255

Phase complete: PRE_BUILD State: FAILED

Phase context status code: COMMAND_EXECUTION_ERROR Message: Error while executing command: $(aws ecr get-login --region $AWS_DEFAULT_REGION --no-include-email). Reason: exit status 255
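The log above uses aws ecr get-login, which exists in AWS CLI v1 but was removed in v2. The sketch below shows the get-login-password form of the same login. It is not what the original buildspec ran, and it still needs the ecr:GetAuthorizationToken permission that was missing here. The function names are hypothetical, and the registry host format assumes a standard commercial region.

```shell
# Registry host for an account ID and region.
ecr_registry_host() {
  printf '%s.dkr.ecr.%s.amazonaws.com\n' "$1" "$2"
}

# Log Docker in to ECR by piping a short-lived password to docker login.
# $1 = AWS account ID, $2 = region
ecr_login() {
  account_id="$1"
  region="$2"
  aws ecr get-login-password --region "$region" |
    docker login --username AWS --password-stdin \
      "$(ecr_registry_host "$account_id" "$region")"
}

# Example call (needs AWS credentials and a Docker daemon):
# ecr_login 123151105123 "$AWS_DEFAULT_REGION"
```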

Gradle version error

: Caused by an incompatibility with the default Gradle version; resolved by changing the script from gradle build to ./gradlew build

Running command gradle --version

------------------------------------------------------------

Gradle 5.6.4

------------------------------------------------------------

...

* What went wrong:

An exception occurred applying plugin request [id: 'org.springframework.boot', version: '2.6.0']

> Failed to apply plugin [id 'org.springframework.boot']

> Spring Boot plugin requires Gradle 6.8.x, 6.9.x, or 7.x. The current version is Gradle 5.6.4

Command did not exit successfully gradle :Api:build exit status 1

Phase complete: BUILD State: FAILED

Phase context status code: COMMAND_EXECUTION_ERROR Message: Error while executing command: gradle :Api:build. Reason: exit status 1
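The Gradle wrapper runs the version declared in the project's gradle/wrapper/gradle-wrapper.properties instead of whatever Gradle is installed on the build image (5.6.4 in the log above). A small sketch that prefers the wrapper when the project ships one; the function name is hypothetical.

```shell
# Choose the Gradle launcher for a project directory:
# the project's own wrapper if it is present and executable,
# otherwise the gradle found on PATH.
gradle_cmd() {
  project_dir="${1:-.}"
  if [ -x "$project_dir/gradlew" ]; then
    printf '%s\n' "./gradlew"
  else
    printf '%s\n' "gradle"
  fi
}

# Example, run from the project root:
# "$(gradle_cmd .)" :Api:build
```

If gradlew is in the repository but not executable, running chmod +x gradlew first would be needed for the wrapper to be picked up.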

Dockerfile path error

: Resolved by correcting the path: . -> ./AWS/Dockerfile

Running command docker build -t $REPOSITORY_URI:latest .

unable to prepare context: unable to evaluate symlinks in Dockerfile path: lstat /codebuild/output/src368940192/src/git-codecommit.ap-northeast-2.amazonaws.com/v1/repos/kps/Dockerfile: no such file or directory
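The post does not show the corrected command. One plausible form, assuming the Dockerfile lives at ./AWS/Dockerfile and the repository root stays the build context, passes the file with -f. The sketch below also checks that the file exists before calling docker; the function name is hypothetical.

```shell
# Build an image from a Dockerfile that is not at the context root.
# $1 = Dockerfile path, $2 = image tag, $3 = build context (default ".")
build_image() {
  dockerfile="$1"
  image="$2"
  context="${3:-.}"
  if [ ! -f "$dockerfile" ]; then
    echo "Dockerfile not found: $dockerfile" >&2
    return 1
  fi
  docker build -f "$dockerfile" -t "$image" "$context"
}

# Example call (needs a Docker daemon):
# build_image ./AWS/Dockerfile "$REPOSITORY_URI:latest" .
```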

EKS cluster describe permission error

: Resolved by adjusting the role's permissions with AmazonEKSWorkerNodePolicy and adding a binding

Running command aws eks update-kubeconfig --region ap-northeast-2 --name example

An error occurred (AccessDeniedException) when calling the DescribeCluster operation: User: arn:aws:sts::123151105123:assumed-role/exampleCodeBuildServiceRole/AWSCodeBuild-123e955a-545c-42c6-8d21-67215aef0123 is not authorized to perform: eks:DescribeCluster on resource: arn:aws:eks:ap-northeast-2:123151105123:cluster/example

Command did not exit successfully aws eks update-kubeconfig --region ap-northeast-2 --name example exit status 255

Phase complete: INSTALL State: FAILED

Phase context status code: COMMAND_EXECUTION_ERROR Message: Error while executing command: aws eks update-kubeconfig --region ap-northeast-2 --name example. Reason: exit status 255
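The post resolved this with AmazonEKSWorkerNodePolicy. As a narrower alternative (not what the post did), an inline policy could grant only eks:DescribeCluster on the one cluster named in the error. This is a sketch; the function name and the policy name codebuild-eks-describe-cluster are hypothetical.

```shell
# Print an IAM policy document that allows eks:DescribeCluster
# on a single cluster ARN.
describe_cluster_policy() {
  cluster_arn="$1"
  cat <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": "eks:DescribeCluster",
      "Resource": "$cluster_arn"
    }
  ]
}
EOF
}

# Example call (needs AWS credentials with iam:PutRolePolicy):
# aws iam put-role-policy \
#   --role-name exampleCodeBuildServiceRole \
#   --policy-name codebuild-eks-describe-cluster \
#   --policy-document "$(describe_cluster_policy arn:aws:eks:ap-northeast-2:123151105123:cluster/example)"
```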

kubectl permission errors

: Resolved by editing the aws-auth ConfigMap to map the user or role

Running command kubectl get po -n namespace1

error: You must be logged in to the server (Unauthorized)

Command did not exit successfully kubectl get po -n namespace1 exit status 1

Phase complete: INSTALL State: FAILED

Phase context status code: COMMAND_EXECUTION_ERROR Message: Error while executing command: kubectl get po -n namespace1. Reason: exit status 1

Error from server (Forbidden): error when retrieving current configuration of:

Resource: "apps/v1, Resource=deployments", GroupVersionKind: "apps/v1, Kind=Deployment"

Name: "my-deploy", Namespace: "namespace1"

from server for: "./AWS/example-deployment.yaml": deployments.apps "my-deploy" is forbidden: User "system:node:AWSCodeBuild-fccb1123-1235-49c9-1238-b11cf05bc0c8" cannot get resource "deployments" in API group "apps" in the namespace "namespace1"

Running command kubectl rollout restart -n namespace1 deployment my-deploy

error: failed to patch: deployments.apps "my-deploy" is forbidden: User "system:node:AWSCodeBuild-a0f27123-b1fc-4e12-1e3f-0eac123f5123" cannot patch resource "deployments" in API group "apps" in the namespace "namespace1"

Command did not exit successfully kubectl rollout restart -n namespace1 deployment my-deploy exit status 1

Phase complete: POST_BUILD State: FAILED

Phase context status code: COMMAND_EXECUTION_ERROR Message: Error while executing command: kubectl rollout restart -n namespace1 deployment my-deploy. Reason: exit status 1

- Add the role used by CodeBuild

$ kubectl edit configmap aws-auth -n kube-system

mapRoles: |
  ...
  - rolearn: arn:aws:iam::123151105123:role/exampleCodeBuildServiceRole
    username: system:node:{{SessionName}}
    groups:
      - system:bootstrappers
      - system:nodes
      - eks-console-dashboard-full-access-group

- Create the cluster role binding

$ vi eks-console-full-access.yaml

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: eks-console-dashboard-full-access-clusterrole
rules:
- apiGroups:
  - ""
  resources:
  - nodes
  - namespaces
  - pods
  verbs:
  - get
  - list
  - patch
- apiGroups:
  - apps
  resources:
  - deployments
  - daemonsets
  - statefulsets
  - replicasets
  verbs:
  - get
  - list
  - patch
- apiGroups:
  - batch
  resources:
  - jobs
  verbs:
  - get
  - list
  - patch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: eks-console-dashboard-full-access-binding
subjects:
- kind: Group
  name: eks-console-dashboard-full-access-group
  apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: ClusterRole
  name: eks-console-dashboard-full-access-clusterrole
  apiGroup: rbac.authorization.k8s.io

$ kubectl apply -f eks-console-full-access.yaml

clusterrole.rbac.authorization.k8s.io/eks-console-dashboard-full-access-clusterrole created

clusterrolebinding.rbac.authorization.k8s.io/eks-console-dashboard-full-access-binding created
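Once the binding exists, the group's permissions can be checked without running a build by impersonating a member of the group with kubectl auth can-i. This is a sketch: the user name codebuild-check is a hypothetical placeholder, and the caller needs impersonation rights (a cluster admin normally has them).

```shell
# Ask the API server whether a member of the bound group may perform
# a verb on a resource in a namespace. kubectl prints "yes" or "no".
# $1 = verb, $2 = resource, $3 = namespace
can_i() {
  verb="$1"
  resource="$2"
  namespace="$3"
  kubectl auth can-i "$verb" "$resource" -n "$namespace" \
    --as "codebuild-check" \
    --as-group "eks-console-dashboard-full-access-group"
}

# Example calls matching the failed commands in the logs above:
# can_i get deployments namespace1
# can_i patch deployments namespace1
```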

WRITTEN BY

- 손가락귀신
